Project Info

Project Description

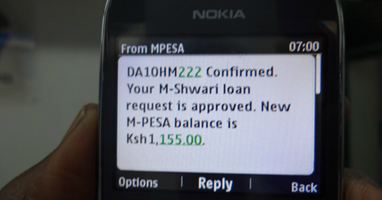

From decisions about loans and humanitarian aid to medical diagnosis and criminal justice, consequential decisions in society increasingly rely on machine learning. In most cases, machine learning algorithms optimize these decisions for a single, private objective. Loan decisions are optimized for profit, smartphone apps are optimized for engagement, and news feeds are optimized for clicks. However, these decisions have side effects: irresponsible payday loans, addictive apps, and fake news may maximize private objectives, but can also harm individuals or society.

Such problems have contributed to a popular backlash against algorithmic decision-making, such that 58% of Americans currently believe that algorithms are biased. This backlash has led some firms to revert to human decision-making. Facebook, for example, has expanded its use of humans for moderation. However, human decisions are subject to their own set of biases, and do not scale as well as machine decisions. Others have suggested adding constraints to algorithmic decision rules with the aim of procedural fairness. While this approach scales, reasonable definitions of fairness often conflict with one another.

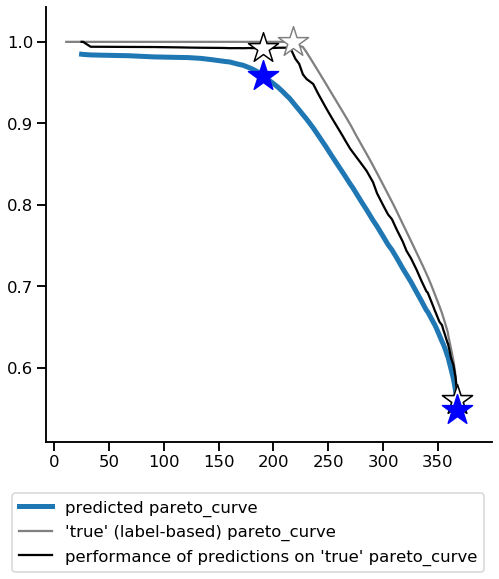

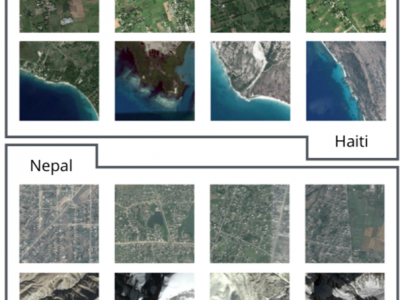

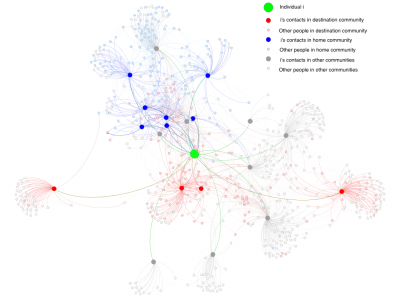

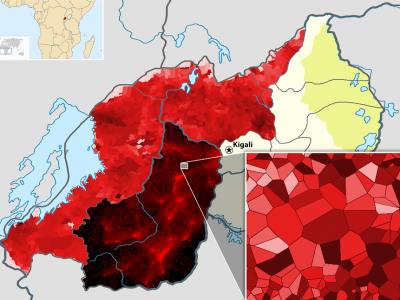

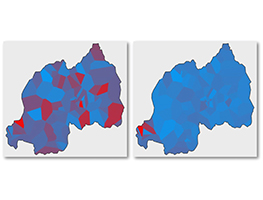

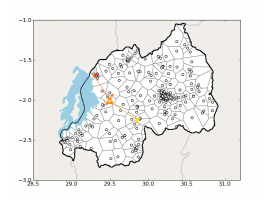

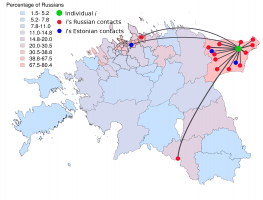

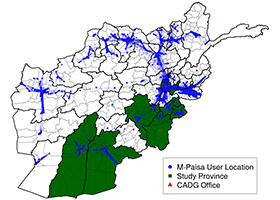

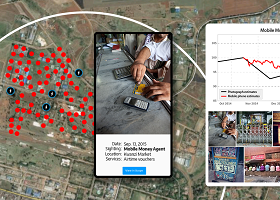

This project develops a framework for multi-objective optimization with noisy data, and characterizes a set of solutions that balance social welfare maximization with traditional loss minimization. The approach requires (imperfect) knowledge of these different objectives; we describe different measurement regimes and how to adapt data collection to this framework. Using two very different empirical applications — YouTube video recommendation and the targeting of humanitarian aid — we show how the approach can be applied to real-world decisions.
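
To make the trade-off concrete, here is a minimal sketch of one common way to explore a two-objective decision: weight the two estimated objectives, select the top-scoring candidates, and sweep the weight. It is an illustration under stated assumptions, not the project's actual method. The function names, the weight `alpha`, the budget `k`, and the synthetic Gaussian data are all hypothetical.

```python
import random


def select_top_k(scores, k):
    """Return the indices of the k highest-scoring candidates."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:k]


def trace_tradeoff(profit_est, welfare_est, profit_eval, welfare_eval, k, alphas):
    """Sweep the weight alpha between the two objectives.

    Candidates are ranked by (1 - alpha) * profit_est + alpha * welfare_est
    (the possibly noisy estimates). The selected set is then scored on
    profit_eval and welfare_eval. Returns (alpha, profit, welfare) tuples.
    """
    results = []
    for alpha in alphas:
        combined = [
            (1 - alpha) * p + alpha * w
            for p, w in zip(profit_est, welfare_est)
        ]
        chosen = select_top_k(combined, k)
        total_profit = sum(profit_eval[i] for i in chosen)
        total_welfare = sum(welfare_eval[i] for i in chosen)
        results.append((alpha, total_profit, total_welfare))
    return results


# Synthetic data (an assumption for illustration only).
rng = random.Random(0)
n = 200
true_profit = [rng.gauss(0, 1) for _ in range(n)]
true_welfare = [rng.gauss(0, 1) for _ in range(n)]

# The decision-maker only observes noisy measurements of each objective.
noisy_profit = [p + rng.gauss(0, 0.3) for p in true_profit]
noisy_welfare = [w + rng.gauss(0, 0.3) for w in true_welfare]

alphas = [i / 10 for i in range(11)]
frontier = trace_tradeoff(
    noisy_profit, noisy_welfare, true_profit, true_welfare, k=20, alphas=alphas
)

for alpha, profit, welfare in frontier:
    print(f"alpha={alpha:.1f}  profit={profit:7.2f}  welfare={welfare:7.2f}")
```

With `alpha = 0` the rule ranks purely on the private objective, and with `alpha = 1` purely on estimated welfare. Intermediate values trace out candidate compromises. Because selection uses noisy estimates while outcomes are scored on the true values, the realized trade-off can differ from the one the estimates suggest.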

Lab Faculty

Joshua Blumenstock

Collaborators

Dan Björkegren (Brown), and the Berkeley fairness crew: Sarah Dean, Moritz Hardt, Lydia Liu, Jackie Mauro, Esther Rolf, Max Simchowitz