Project Info

Project Description

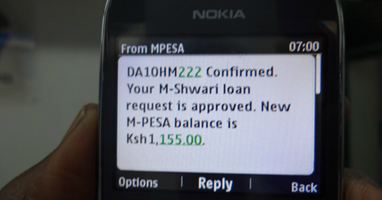

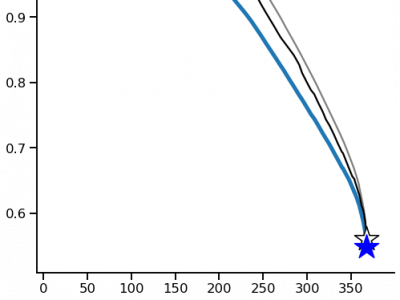

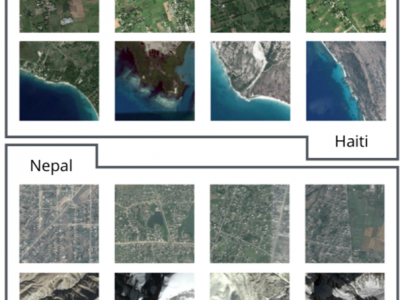

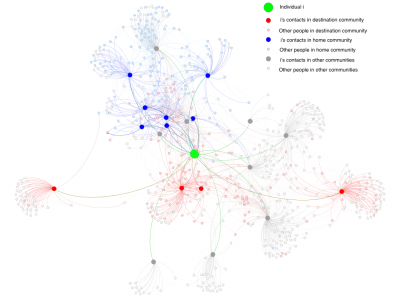

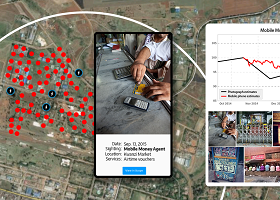

An increasing number of decisions are guided by machine learning algorithms. Typically, an individual’s observed behavior is used as input to an estimator whose output determines future decisions about that individual. But when such an estimator is used to allocate resources, individuals may strategically alter their behavior to obtain a desired outcome. This paper develops a new class of estimators that remain stable under manipulation, even when the decision rule is fully transparent. We explicitly model the costs of manipulating different behaviors and identify decision rules that are stable in equilibrium. Through a large field experiment in Kenya, we show that decision rules estimated with our strategy-robust method outperform those based on standard supervised learning approaches.

Lab Faculty

Joshua Blumenstock (PI)

Collaborators

Daniel Bjorkegren (Brown)

Status

Ongoing